Learning Portal - Evaluation basics


What is evaluation? How are evaluations structured? How is the Common Agricultural Policy (CAP) currently evaluated? Dive into the basics of CAP evaluation.


Basics

In a nutshell

What is evaluation?

Monitoring, evaluation and auditing are three processes that are often misunderstood and confused with one another. Monitoring and evaluation are frequently presented together because they are interlinked and share the same goal, but they remain fundamentally different processes in scope, in approach and in who is responsible for conducting them.

Audits are official and independent inspections of an organisation’s accounts and performance. A financial audit examines whether the organisation's financial records are a fair and accurate representation of the transactions they claim to represent. A performance audit is an independent assessment of an entity's operations to determine whether specific programmes or functions are working as intended to achieve established goals.

Monitoring is an exhaustive and regular examination of the resources, outputs and results of public interventions. It is based on systematic information obtained primarily from operators in the form of reports, reviews, balance sheets and indicators. Monitoring is generally the responsibility of the actors who implement policy measures.

Evaluation is the process of judging interventions according to their results, impacts and the extent to which they meet the needs they were intended to address. Evaluation helps to establish and understand to what extent, and why, observed changes can be attributed to a policy’s interventions. To do so, evaluations draw not only on monitoring data but also on many additional sources, which can be analysed using a range of quantitative and qualitative methods to gain deeper insight into programmes and their impacts. Evaluations can also include in-depth analyses or case studies to understand how a policy works in a particular situation and context.

Who does it?

Evaluation is the responsibility of evaluators, who are independent from implementing bodies. As such, evaluation provides an ‘external view’ and is distinct from self-assessment (self-evaluation), which is carried out by those who implement an intervention with a view to improving it.

Evaluation criteria

CAP evaluations look at five evaluation criteria of an intervention: effectiveness, efficiency, coherence, relevance and EU value added.

- Relevance analyses the extent to which the objectives and design of interventions remain relevant to the current and future needs and problems in the EU, including wider EU policy goals and priorities. Relevance assessment involves looking at differences and trade-offs between priorities or needs. It requires analysing any changes in the context and assessing the extent to which an intervention can be (or has been) adapted to remain relevant.

- Coherence can be understood as the extent to which complementarity or synergy can be found within an intervention and in relation to other interventions. Coherence analysis may highlight synergies that improve overall performance or that would not have been possible if the intervention had been introduced at national level. It may also point to tensions, e.g. objectives that are potentially contradictory or overlapping, or approaches that are causing inefficiencies.

- External coherence analyses the extent to which various components of different interventions affecting the same context operate together to achieve their objectives.

- Internal coherence analyses the extent to which various components of the same intervention operate together to achieve its objectives.

- Effectiveness can be understood as the extent to which the intervention achieved, or is expected to achieve, its objectives, including any differential outcomes across groups. Effectiveness can provide insight into whether an intervention has attained its planned or intended outcomes, the process by which this was done, which factors were decisive in this process and whether there were any unintended effects. The evaluation should form an opinion on the progress made to date and the role of the EU action in delivering observed changes.

- Efficiency is the extent to which the intervention achieved, or is expected to achieve, its objectives in an economic and timely manner. ‘Economic’ refers to converting inputs (funds, expertise, natural resources, time, etc.) into outputs, results and impacts in the most cost-effective way possible. This may include assessing operational efficiency (i.e. how well the intervention was managed). Efficiency analysis should also explore the potential for simplification and burden reduction by measuring administrative and regulatory burdens.

- EU value added looks for changes due to EU intervention over and above what could reasonably have been expected from national action by Member States alone. In policy areas where the EU has non-exclusive competence, the analysis of EU added value should explore how the subsidiarity principle is applied in practice by assessing the added value of EU action compared with that of other actors.
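As a rough illustration of the efficiency criterion, the Python sketch below compares two interventions using one simple cost-effectiveness ratio: public expenditure per unit of output. The intervention names, figures and the choice of ratio are hypothetical assumptions; an actual efficiency analysis would also look at results, impacts, timeliness and administrative burden.

```python
from dataclasses import dataclass


@dataclass
class Intervention:
    """Hypothetical intervention with its financial input and the output achieved."""
    name: str
    expenditure_eur: float  # input: public funds spent
    output_units: float     # output: e.g. number of farms supported (assumed unit)


def cost_per_output(iv: Intervention) -> float:
    """Cost-effectiveness ratio: euros spent per unit of output (lower is better)."""
    if iv.output_units <= 0:
        raise ValueError(f"{iv.name}: no output recorded, ratio undefined")
    return iv.expenditure_eur / iv.output_units


# Illustrative figures only, not real programme data.
interventions = [
    Intervention("Investment support A", 2_000_000, 400),
    Intervention("Investment support B", 1_500_000, 250),
]

# List interventions from most to least cost-effective.
for iv in sorted(interventions, key=cost_per_output):
    print(f"{iv.name}: {cost_per_output(iv):,.0f} EUR per unit of output")
```

Such a ratio is only meaningful when the interventions deliver the same kind of output (here, hypothetically, farms supported); comparing euros per training session with euros per kilometre of road would tell an evaluator very little.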

Elements of an evaluation system

Defining the elements

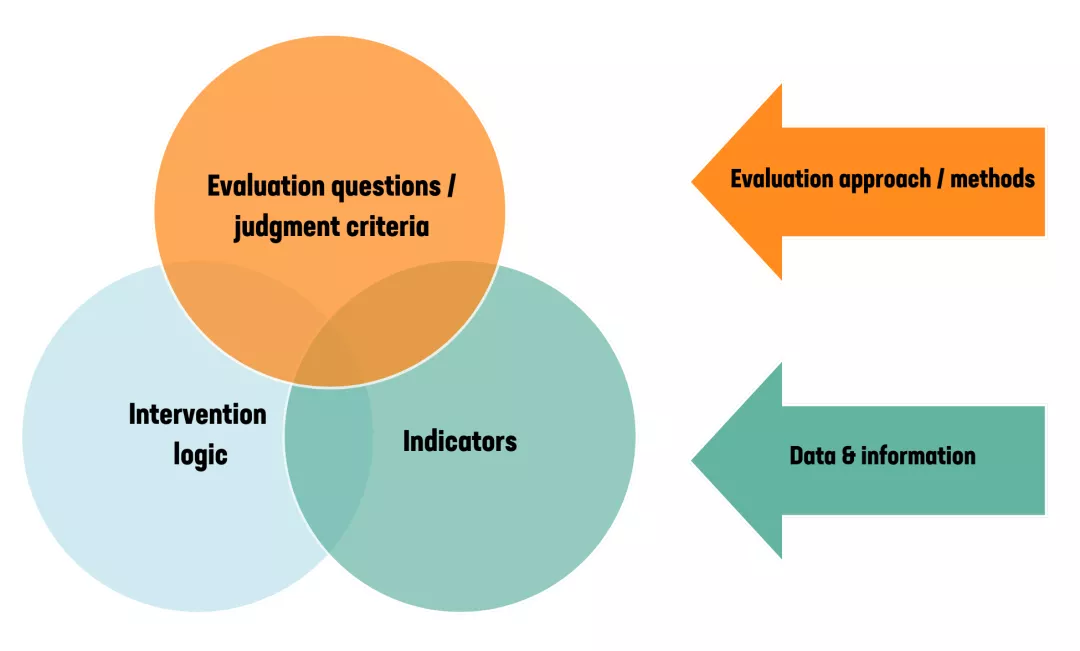

An evaluation system is composed of the following core elements: an intervention logic, evaluation questions and corresponding judgement criteria (or factors of success), and indicators.

Figure: Venn diagram of the core elements of an evaluation system (intervention logic, evaluation questions / judgement criteria, indicators), supported by evaluation approaches / methods and by data and information.

Intervention logic

The intervention logic is a (narrative) description, usually displayed as a diagram, that summarises how the intervention is expected to work. In other words, it describes the expected chain of events that should lead to an intended change. An intervention is expected to be a solution to a problem or need, and the intervention logic is a tool that helps to explain (and often visualise) the different steps and actors involved in the intervention and their dependencies, thus presenting the expected ‘cause and effect’ relationships. The intervention logic serves as the foundation for evaluations.

Evaluation questions and judgement criteria

Evaluation questions define the key areas to assess in relation to policy objectives. Questions should be worded in a way that forces the evaluator to provide a complete, evidence-based answer that improves understanding of the performance of an EU intervention against the five evaluation criteria.

Each evaluation question should be associated with one or more judgement criteria (factors of success). These criteria are used to define what success would look like in relation to the evaluation question. They also serve as the basis for identifying the indicators that can be used to measure the extent of achievements and, hence, answer the evaluation question.

Indicators

An indicator is a tool to measure the achievement of an objective (e.g. a resource mobilised, an output accomplished or an effect obtained). Indicators also serve to describe the context (economic, social or environmental). Indicators are aggregates of data that allow a phenomenon to be quantified (and simplified), and they are the measurement tools used to collect evidence in all evaluations.
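To make the idea of an indicator as an aggregate of data concrete, the following Python sketch turns hypothetical project-level monitoring records into two output indicators: the number of farms receiving investment support and the total volume of investment. The record layout, identifiers and amounts are assumptions for illustration, not a prescribed CAP calculation.

```python
from dataclasses import dataclass


@dataclass
class ProjectRecord:
    """One hypothetical monitoring record reported for a supported project."""
    farm_id: str
    investment_eur: float


def output_indicators(records: list[ProjectRecord]) -> dict[str, float]:
    """Aggregate project-level data into two output indicators."""
    supported_farms = {r.farm_id for r in records}  # count each farm only once
    return {
        "farms_receiving_investment_support": len(supported_farms),
        "total_investment_eur": sum(r.investment_eur for r in records),
    }


records = [
    ProjectRecord("F001", 50_000),
    ProjectRecord("F002", 30_000),
    ProjectRecord("F001", 20_000),  # second project on the same farm
    ProjectRecord("F003", 40_000),
]

print(output_indicators(records))
```

Counting distinct farm identifiers rather than records avoids double-counting a farm that carried out more than one supported project.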

Bringing it all together

To perform evaluations, these three elements must be supported by different evaluation approaches, methods, data and information.

An evaluation approach is a way of conducting an evaluation. It covers the conceptualisation and practical implementation of an evaluation in order to produce evidence on the effects of interventions and their achievements. Evaluation methods are types of evaluation techniques and tools that fulfil different purposes. They usually consist of procedures and protocols that ensure consistency in the way each evaluation is undertaken. Evaluation approaches and methods help to attribute the effects and impacts of a specific intervention, thus helping policymakers understand its real value.

Data, data, data

Data is quantitative information on selected indicators or variables. It can be collected directly from the source (primary data, e.g. through surveys, monitoring and statistics from entities) or drawn from pre-existing sources (secondary data, e.g. studies and aggregated statistics). Qualitative information can also be gathered to provide context for the evaluation; it is typically collected directly from various stakeholders (intervention managers, beneficiaries, etc.) using qualitative or mixed methods (surveys, case studies, focus groups, interviews, etc.). Data and information represent the evidence for the evaluation.

Consistency

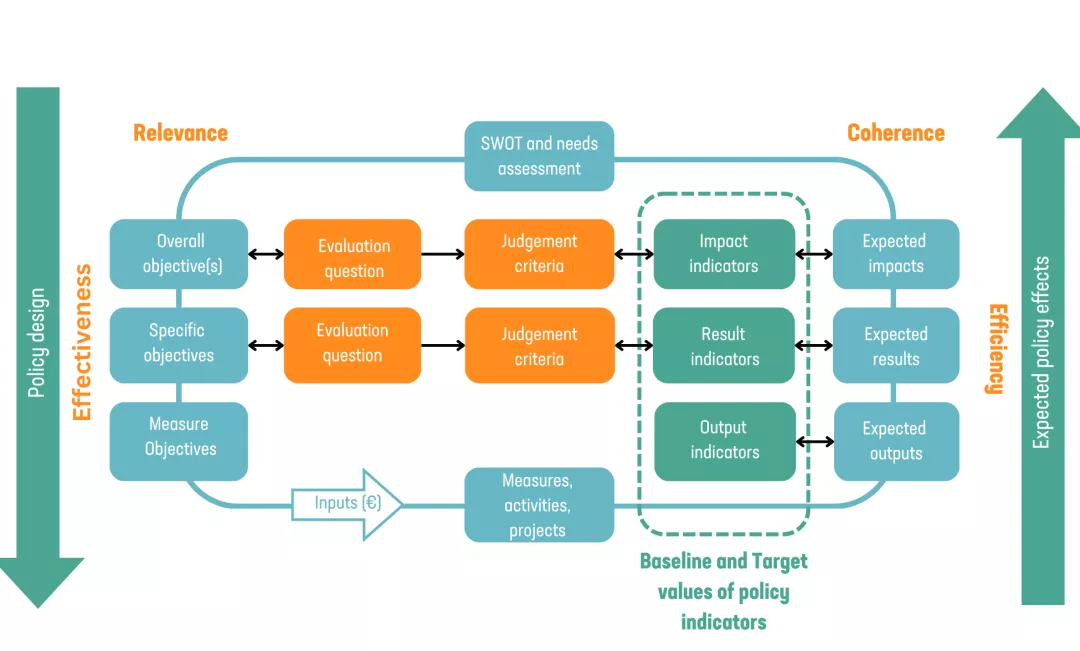

The figure shows the consistency links between the intervention logic and the other evaluation elements (evaluation questions and indicators), all of which must be defined before an evaluation starts. It depicts the generic intervention logic for Rural Development Programmes in the 2014-2022 period.


The intervention logic of the Rural Development Programmes 2014-2022 shown in the figure begins with identifying needs through a SWOT analysis and setting a hierarchy of objectives (overall, specific, measure objectives). Policy interventions (measures, activities, projects) are then designed and implemented using allocated financial inputs (Euros). The effectiveness, efficiency, relevance, and coherence of these interventions are evaluated using the defined evaluation elements (related evaluation questions, judgment criteria, and indicators including target values). The expected policy effects are assessed through the comparison of expected versus realised outputs, results, and impacts.

The blue boxes show the relationships between the needs identified through the SWOT analysis and needs assessment (formulated as objectives), the actions (e.g. measures, activities and projects) that will be undertaken with the support of a budget to achieve those objectives, and the expected effects. These blue boxes make up the intervention logic. The expected effects are defined as outputs, results and impacts:

- Outputs are actions financed and accomplished with the money allocated to an intervention. Outputs may take the form of facilities or works (e.g. building a road, a farm investment or tourist accommodation). They may also take the form of intangible services (e.g. training or consultancy).

- Results are the direct advantages or disadvantages that beneficiaries obtain at the end of their participation in a public intervention, or as soon as a facility funded through an intervention has been completed. Results can be observed when a beneficiary or operator completes an action and reports on how the allocated funds were spent and managed. This can take many forms, such as reporting that accessibility has improved thanks to the construction of a road, or that firms which received advice say they are satisfied. Results should be monitored regularly so that policymakers can adapt the implementation of the intervention according to the results obtained.

- Impacts typically refer to the changes associated with a particular intervention over the longer term. Such impacts may occur over different timescales, affect different actors and be relevant at different scales (e.g. local, regional, national and EU levels).

The orange boxes are evaluation questions, which are usually cause-and-effect questions that ask about net effects and are linked to objectives. Evaluation questions may be further specified with judgement criteria, which define what success means under each objective (overall and specific). Together, evaluation questions and judgement criteria link objectives to indicators.

The green boxes are indicators, which should be consistent with the judgment criteria or evaluation questions and with the expected effects (i.e. outputs, results and impacts).
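The consistency links between objectives, evaluation questions, judgement criteria and indicators can be checked mechanically. The Python sketch below is a hypothetical illustration, not an official tool: it represents evaluation questions, their judgement criteria and indicators as simple data classes and lists every broken link in the chain from objective to indicator. All objective, question, criterion and indicator texts are invented for the example.

```python
from dataclasses import dataclass, field


@dataclass
class JudgementCriterion:
    text: str
    indicators: list[str] = field(default_factory=list)


@dataclass
class EvaluationQuestion:
    text: str
    objective: str  # the objective this question is linked to
    criteria: list[JudgementCriterion] = field(default_factory=list)


def consistency_gaps(objectives: list[str],
                     questions: list[EvaluationQuestion]) -> list[str]:
    """List broken links in the chain objective -> question -> criterion -> indicator."""
    gaps = []
    covered = {q.objective for q in questions}
    gaps += [f"Objective without evaluation question: {o}"
             for o in objectives if o not in covered]
    for q in questions:
        if q.objective not in objectives:
            gaps.append(f"Question linked to unknown objective: {q.text}")
        if not q.criteria:
            gaps.append(f"Question without judgement criterion: {q.text}")
        gaps += [f"Judgement criterion without indicator: {c.text}"
                 for c in q.criteria if not c.indicators]
    return gaps


# Hypothetical programme elements.
objectives = ["Improve farm competitiveness", "Reduce emissions from agriculture"]
questions = [
    EvaluationQuestion(
        "To what extent has the programme improved farm competitiveness?",
        "Improve farm competitiveness",
        [JudgementCriterion("Labour productivity of supported farms has increased",
                            ["Agricultural output per annual work unit"])],
    ),
    EvaluationQuestion(
        "To what extent has the programme reduced emissions from agriculture?",
        "Reduce emissions from agriculture",
        [JudgementCriterion("Emissions from agriculture have decreased")],  # no indicator yet
    ),
]

for gap in consistency_gaps(objectives, questions):
    print(gap)
```

Running such a check while the evaluation elements are being designed flags gaps (here, a judgement criterion with no indicator) before any data collection is planned.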

Several types of indicators can be used:

- Output indicators measure activities directly realised within a programme. These activities are the first step towards realising the operational objectives of an intervention and are measured in physical or monetary units (e.g. number of training sessions organised, number of farms receiving investment support or total volume of investment).

- Result indicators measure the direct and immediate effects of an intervention. They can provide information on changes in, for example, the behaviour, capacity or performance of direct beneficiaries and are measured in physical or monetary terms (e.g. gross number of jobs created and successful training outcomes). Result indicators are used to answer evaluation questions related to specific objectives.

- Impact indicators refer to the outcomes of interventions beyond the immediate effects (e.g. total factor productivity in agriculture, emissions from agriculture, water quality and degree of rural poverty). Impact indicators are normally expressed in ‘net’ terms, obtained by subtracting from the total the effects that cannot be attributed to the intervention itself and by accounting for indirect effects. Impact indicators measure a programme’s effects on the area in which it is implemented and are used to answer evaluation questions related to overall objectives.
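The text does not prescribe how effects that cannot be attributed to an intervention are removed. One widely used counterfactual technique is difference-in-differences: the change observed among beneficiaries is compared with the change in a comparable group of non-beneficiaries over the same period. The Python sketch below applies it to hypothetical averages and adds an optional, separately estimated indirect-effects term (e.g. displacement); the figures and the additive treatment of indirect effects are assumptions for illustration.

```python
def net_effect_did(treated_before: float, treated_after: float,
                   control_before: float, control_after: float,
                   indirect_effects: float = 0.0) -> float:
    """Difference-in-differences estimate of a net effect.

    Gross change among beneficiaries, minus the change observed in a comparable
    group of non-beneficiaries (an estimate of what would have happened anyway),
    plus an optional, separately estimated indirect-effects term
    (negative for e.g. displacement, positive for e.g. multiplier effects).
    """
    gross_change = treated_after - treated_before
    counterfactual_change = control_after - control_before
    return gross_change - counterfactual_change + indirect_effects


# Hypothetical average labour productivity (EUR of output per worker).
gross = 42_000 - 35_000  # change among supported farms
net = net_effect_did(35_000, 42_000, 34_000, 37_000, indirect_effects=-1_000)
print(f"Gross change: {gross} EUR, net effect: {net} EUR")
```

Here the gross change of 7,000 EUR shrinks to a net effect of 3,000 EUR once the change that also occurred among non-beneficiaries (3,000 EUR) and a hypothetical displacement effect (1,000 EUR) are taken into account. The estimate is only as credible as the comparison group is comparable.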

Target values are often assigned to indicators. Targets are values, set on the basis of existing information, that a programme aims to reach in order to deliver the expected effects, achieve its objectives and address the identified needs.
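Once a target value has been set, progress can be expressed as the share of the planned change that has been realised. The Python sketch below assumes that a baseline value is also known (the text does not specify one) and uses a hypothetical result indicator and figures.

```python
def target_achievement(baseline: float, target: float, achieved: float) -> float:
    """Share of the planned change (baseline -> target) realised so far, in percent."""
    planned_change = target - baseline
    if planned_change == 0:
        raise ValueError("Target equals baseline; achievement rate undefined")
    return 100 * (achieved - baseline) / planned_change


# Hypothetical result indicator: renewable energy produced by supported projects (MWh/year).
print(f"{target_achievement(baseline=0, target=5_000, achieved=3_200):.1f} %")
```

Because the rate is computed relative to the planned change, the same function also works for indicators where the target is a reduction: a baseline of 100, a target of 80 and an achieved value of 90 give 50 %.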

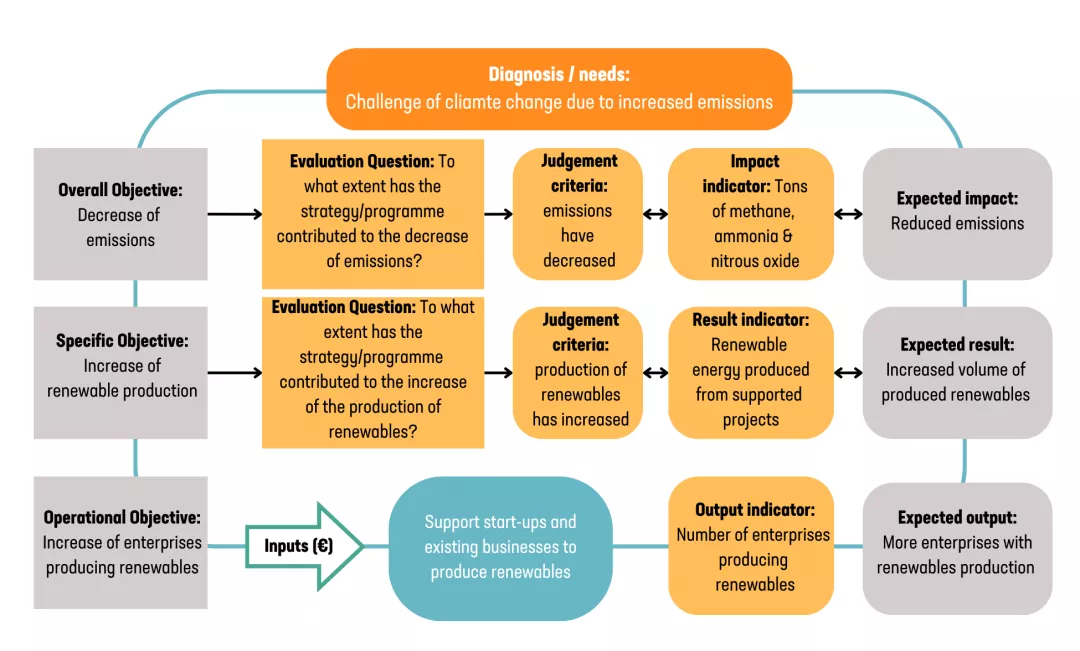

The figure shows an example of consistency interlinkages between the different evaluation elements of a hypothetical policy programme designed to address the problem of emissions from agriculture.


The figure shows an example of a specific intervention logic for RDP interventions that aims to address climate change by decreasing emissions from agriculture and increasing renewable energy production. Financial inputs (Euros) support start-ups and existing businesses in producing renewables.

The operational objective is to increase the number of enterprises producing renewables. The output indicator is the number of enterprises producing renewables, and the expected output is more enterprises involved in renewables production.

The specific objective is to increase renewable production. This is evaluated by asking whether the strategy or programme has contributed to increasing renewable production. The judgement criterion for success is that the production of renewables has increased, and the result indicator is the amount of renewable energy produced by supported projects. The expected result is an increased volume of renewables produced.

The overall objective is to decrease emissions. This is evaluated by asking whether the strategy or programme has contributed to decreasing emissions. The judgement criterion for success is that emissions have decreased, and the impact indicator is the tonnes of methane, ammonia and nitrous oxide emissions reduced. The expected impact is reduced emissions.
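The renewables example can also be written down as data. The Python sketch below encodes its three levels (the texts come from the example; the data layout and the pairing check are assumptions for illustration) and checks that each objective level uses the matching indicator type: output indicators for operational objectives, result indicators for specific objectives and impact indicators for overall objectives.

```python
from dataclasses import dataclass
from typing import Optional

# Assumed pairing of objective levels with indicator types.
EXPECTED_INDICATOR_TYPE = {
    "operational": "output",
    "specific": "result",
    "overall": "impact",
}


@dataclass(frozen=True)
class Level:
    """One level of the renewables intervention logic (hypothetical data layout)."""
    objective_level: str
    objective: str
    indicator_type: str
    indicator: str
    expected_effect: str
    evaluation_question: Optional[str] = None  # none at operational level in the example
    judgement_criterion: Optional[str] = None


LOGIC = [
    Level("operational", "Increase the number of enterprises producing renewables",
          "output", "Number of enterprises producing renewables",
          "More enterprises involved in renewables production"),
    Level("specific", "Increase renewable production",
          "result", "Amount of renewable energy produced from supported projects",
          "Increased volume of produced renewables",
          evaluation_question="Has the strategy or programme contributed to the increase of renewable production?",
          judgement_criterion="The production of renewables has increased"),
    Level("overall", "Decrease emissions",
          "impact", "Tonnes of methane, ammonia and nitrous oxide reduced",
          "Reduced emissions",
          evaluation_question="Has the strategy or programme contributed to the decrease of emissions?",
          judgement_criterion="Emissions have decreased"),
]


def mismatched_levels(logic: list[Level]) -> list[str]:
    """Return objective levels whose indicator type does not match the assumed pairing."""
    return [lv.objective_level for lv in logic
            if EXPECTED_INDICATOR_TYPE.get(lv.objective_level) != lv.indicator_type]


for lv in LOGIC:
    print(f"[{lv.objective_level}] {lv.objective} -> {lv.indicator_type} indicator: "
          f"{lv.indicator} -> expected: {lv.expected_effect}")
print("Mismatched levels:", mismatched_levels(LOGIC))
```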

Training materials

Key evaluation concepts for 2023-2027 based on Regulation (EU) 2022/1475 in relation to the evaluation of CAP Strategic Plans
